How Generative AI Amplifies Hyper-Realistic Phishing Attacks

Generative AI has ushered in groundbreaking developments in technology. With less human intervention, faster turnaround, and fewer routine errors, it is gradually becoming the new normal in the workplace, academia, and our households.

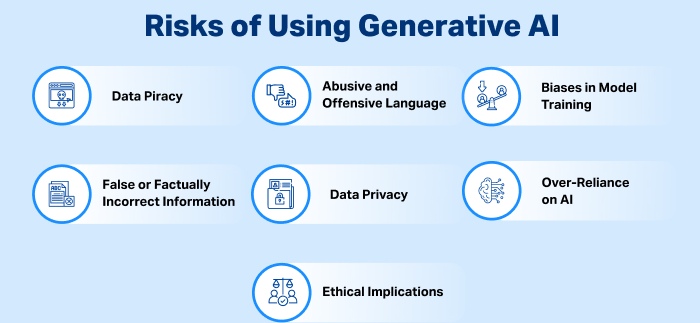

However, like any coin, this revolutionary technology has two sides, and it comes with its own set of drawbacks.

Security leaders and industry experts around the globe have raised concerns about rising cybercrime, driven in part by phishing actors' calculated use of generative AI.

Generative AI Facilitates Persuasive Phishing Emails!

The likes of Bard and ChatGPT have been making their way into every industry at lightning speed!

Their proliferation has been a boon for phishing actors as well.

ChatGPT enables phishing actors to come up with highly convincing and persuasive emails with very little effort.

Generative AI can help these cybercriminals churn out emails that look genuine and polished in tone, language, grammar, and punctuation. AI tools have made writing such emails easy and accessible for threat actors.

According to IBM X-Force, just five prompts and five minutes are enough to generate a compelling phishing email.

Generative AI tools can also create hyper-personalized, sharply targeted emails by drawing on the information about a user that is publicly available online.

How Can Generative AI Pose a Threat to Cybersecurity?

Phishing actors and cyber scammers are evolving as fast as modern technology. They are always on the lookout for tools and technologies that sharpen their phishing skills. Generative AI is one such technology, and these threat actors are exploiting it for malicious ends.

According to Darktrace, there has been a significant decline in VIP impersonation. Threat actors now prefer to impersonate internal IT team members. To do this, they rely on deepfake voices and linguistically sophisticated phishing emails produced with generative AI.

An HM Government report suggests that by 2025, generative AI will have intensified threats such as impersonation, malicious emails, fraud, and child sexual abuse imagery. The report also expects that, as the use of generative AI grows, the threat will spread into the socio-political sphere.

Image sourced from darwinbox.com

How to Prevent Cyber Fraud and Protect Your Sensitive Data from Generative AI-Backed Phishing Actors

Despite the surge in security threats that has followed the spread of generative AI, many security leaders have not yet adopted strategies to match. They are still combating high-tech phishing emails with conventional defenses such as legacy tools and the built-in protections of cloud email services.

Here’s what every consumer and business must do to combat social engineering attacks powered by generative AI:

Keep an eye on grammatical sophistication.

Phishing emails are no longer reliably riddled with grammatical errors. With generative AI so easily accessible, malicious emails are becoming more polished and convincing.

Poor grammar is no longer a dependable red flag for spotting a scammer. Start educating yourself and your team about other signals, such as the length of the email and how intricate its wording is.

Unusually long, overly formal content can be a warning sign, although it is not proof of malice on its own.

Get in touch with the sender over a call.

Feeling doubtful? Call the sender straight away, using a number you already know rather than one given in the email. This is a quick way to establish whether the email is legitimate.

Agree on a “password” or “safe word” with your close friends and family to guard against phone scams backed by AI deepfake voice technology.

Power up your identity and access management controls.

Strong identity and access management controls help keep sensitive data safe by making it accessible only to authorized members. They also make it much harder for a threat actor to get in without being noticed.

Adaptability and upskilling are key!

Threat actors are constantly honing their phishing skills and scamming tactics. To avoid fraud and scams, you need to stay up to date with the latest AI developments and trends. Also, keep investing in your threat detection systems and employee training programs so that you can fight phishing threats effectively.

AI: A Boon or a Bane?

The answer lies in how AI is adopted and used. When leveraged well, defensive AI can make your defenses far more resilient to phishing. It should be an integral part of conventional cybersecurity systems, because it can analyze and learn the communication patterns within an organization and flag messages that deviate from them. Embracing defensive AI is fast becoming essential for tackling the threats posed by phishing actors.
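To give a feel for what "understanding communication patterns" means, here is a minimal Python sketch. It is not defensive AI: real products learn these patterns statistically, while this is only a rule-based stand-in for one such pattern, the impersonation of internal staff. The `KNOWN_CONTACTS` directory, the `example.com` addresses, and the function name are all hypothetical.

```python
from email.utils import parseaddr

# Hypothetical directory of internal contacts: display name -> address on record.
KNOWN_CONTACTS = {
    "it helpdesk": "helpdesk@example.com",
    "jane doe": "jane.doe@example.com",
}


def looks_like_impersonation(from_header: str) -> bool:
    """Return True when the display name matches a known internal contact
    but the sending address differs from the one on record."""
    display_name, address = parseaddr(from_header)
    expected = KNOWN_CONTACTS.get(display_name.strip().lower())
    if expected is None:
        # Unknown display name: this simple rule has nothing to compare against.
        return False
    return address.strip().lower() != expected


if __name__ == "__main__":
    print(looks_like_impersonation("IT Helpdesk <helpdesk@example.com>"))
    print(looks_like_impersonation("IT Helpdesk <helpdesk@examp1e-support.com>"))
```

A rule like this only catches exact display-name matches, which is why it works best as one signal among many rather than as a standalone filter.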

We can also deploy email authentication standards such as SPF, DKIM, and DMARC, along with BIMI, to shield against spoofed phishing emails. Ultimately, it is up to us to strike the right balance between security threats and technological progress by using AI judiciously.
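To make the DMARC part of this more concrete: a domain publishes its policy as a DNS TXT record made up of `tag=value` pairs separated by semicolons. The sketch below parses such a record and checks whether it asks receivers to quarantine or reject mail that fails authentication. The sample record and the helper names are illustrative assumptions, and no DNS lookup is performed.

```python
def parse_dmarc_record(record: str) -> dict:
    """Split a DMARC TXT record into a dictionary of tag -> value."""
    tags = {}
    for part in record.split(";"):
        part = part.strip()
        if not part or "=" not in part:
            continue  # skip empty or malformed fragments
        key, _, value = part.partition("=")
        tags[key.strip().lower()] = value.strip()
    return tags


def dmarc_is_enforcing(record: str) -> bool:
    """Return True if the policy tag is 'quarantine' or 'reject'.

    A policy of 'none' only requests reports and does not block anything.
    """
    tags = parse_dmarc_record(record)
    if tags.get("v") != "DMARC1":
        return False
    return tags.get("p", "").lower() in {"quarantine", "reject"}


if __name__ == "__main__":
    # Illustrative record; example.com is a placeholder domain.
    sample = "v=DMARC1; p=reject; rua=mailto:dmarc-reports@example.com"
    print(parse_dmarc_record(sample))
    print(dmarc_is_enforcing(sample))
```

A domain whose policy is still `p=none` gets reports about spoofing attempts but no enforcement, so this check is a quick way to spot domains that have only half-deployed DMARC.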